TL;DR (Executive Summary)

- 2025 is the inflection point for QA. Traditional automation alone can’t keep up with multi-stack applications, faster release cadences, and rising compliance scrutiny.

- AI testing introduces mechanisms, not magic. From auto-generation of candidate cases to diff-aware prioritization, self-healing scripts, and flake clustering, AI augments QA where scripts break down.

- Continuous quality moves inside CI/CD. Tests are generated, executed, and stabilized directly in GitHub, GitLab, Jenkins, or Azure DevOps pipelines—tightening feedback loops without slowing delivery.

- Governance is non-negotiable. Synthetic data, masking, model logs, and audit trails ensure AI-generated tests are compliant with PII/PHI regulations and enterprise audit standards.

- Qadence operationalizes this shift. By combining compiler-grade analysis with Gen AI agents and human-in-the-loop reviews, Qadence reduces cycle time, cuts flakiness, and gives QA leaders measurable control.

Introduction: The QA Turning Point

For most enterprises, QA has reached a breaking point. Test automation coverage plateaus around 20-30%, while the complexity of distributed systems keeps climbing. Regression suites balloon, pipelines slow, and release confidence erodes as flaky tests and brittle scripts consume more engineering hours than they save.

At the same time, board-level priorities have shifted. Velocity and reliability are measured by DORA metrics: deploy frequency, lead time, change failure rate, and recovery time. QA teams are now directly accountable for these outcomes, not just for “finding bugs.”

This is where AI testing marks a turning point. It doesn’t “replace QA” but reshapes it:

- Generation becomes proactive. Candidate cases are created pre-sprint from requirements, OpenAPI specs, and traffic data.

- Execution becomes selective. Diff-aware prioritization ensures only relevant tests run per commit.

- Stability improves. Self-healing scripts and flake clustering reduce false failures that block pipelines.

- Governance scales. Automated logs, reviews, and synthetic data flows keep compliance intact.

The net effect is a QA function that operates inside the delivery pipeline, not alongside it, accelerating releases while raising confidence. This paper unpacks the mechanisms, governance models, and CI/CD patterns that will define QA in 2025, and shows how platforms like Qadence make them real at enterprise scale.

What Is AI Testing?

AI testing refers to a class of QA workflows in which machine-learned models, agents, and feedback loops drive key testing functions (generation, prioritization, execution, stabilization, and maintenance), rather than relying solely on static, hand-coded scripts.

It doesn’t mean “push a button and QA vanishes.” Instead, it means the test infrastructure becomes adaptive and context-aware.

Key dimensions of AI testing

- Candidate Generation: From requirements (user stories, acceptance criteria), API specifications (OpenAPI, GraphQL schema), or production traffic logs, AI models propose potential test cases, both positive and negative.

- Impact / Diff-aware Prioritization: Instead of re-running full suites, the system selects only the subset of tests relevant to the change, based on model-augmented dependency graphs and historical failure signals.

- Self-Healing & Flake Management: When tests fail for reasons unrelated to product behavior (CSS changes, timing, DOM rewrites), models detect root causes, adjust locators or wait strategies automatically, and cluster flaky patterns to suppress false failures.

- Feedback & Adaptation: The AI system leverages real failure data and human reviews to iteratively refine prompts, test selection thresholds, and healing logic.

- Governance & Observability: Every generated test, model version, training input, and fix event is logged. Data controls (masking, synthetic data) and approval gates ensure compliance, auditability, and trust.
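To make candidate generation concrete, here is a minimal sketch. The systems described above use generative models for this step; this code uses a deterministic, rule-based stand-in instead, so its output is predictable. It reads standard OpenAPI 3 parameter objects (`name`, `required`, `schema`) and the JSON Schema keywords `minimum`, `maximum`, `maxLength`, and `enum`, and emits positive and negative inputs. The function names and the `list_orders` operation are hypothetical.

```python
from typing import Any

Case = tuple[dict[str, Any], bool]  # (request parameters, expected to be accepted?)


def boundary_candidates(name: str, schema: dict[str, Any]) -> list[Case]:
    """Derive positive and negative inputs for one parameter from JSON Schema keywords."""
    cases: list[Case] = []
    if schema.get("type") == "integer":
        if "minimum" in schema:
            cases.append(({name: schema["minimum"]}, True))       # lower bound is valid
            cases.append(({name: schema["minimum"] - 1}, False))  # just below is not
        if "maximum" in schema:
            cases.append(({name: schema["maximum"]}, True))
            cases.append(({name: schema["maximum"] + 1}, False))
        cases.append(({name: "not-a-number"}, False))             # wrong type
    elif schema.get("type") == "string":
        if "maxLength" in schema:
            cases.append(({name: "a" * schema["maxLength"]}, True))
            cases.append(({name: "a" * (schema["maxLength"] + 1)}, False))
        for value in schema.get("enum", []):
            cases.append(({name: value}, True))                   # every allowed value
    return cases


def candidates_for_operation(operation: dict[str, Any]) -> list[Case]:
    """Expand every parameter of an OpenAPI operation into candidate test inputs."""
    params = operation.get("parameters", [])
    cases: list[Case] = []
    for param in params:
        cases.extend(boundary_candidates(param["name"], param.get("schema", {})))
    if any(param.get("required") for param in params):
        cases.append(({}, False))  # sending no parameters should be rejected
    return cases


# Example: a hypothetical GET /orders operation with one bounded query parameter.
list_orders = {
    "parameters": [
        {"name": "limit", "in": "query", "required": True,
         "schema": {"type": "integer", "minimum": 1, "maximum": 100}},
    ]
}
for params, accepted in candidates_for_operation(list_orders):
    print(params, "expect 2xx" if accepted else "expect 4xx")
```

A generative model would add cases that rules cannot infer, such as business-rule violations; a human reviewer then decides which candidates become tests.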

In short: AI testing blends human curation with machine intelligence, making testing pipelines leaner, more maintainable, and less brittle at scale.

In a traditional QA setup, tests are manually scripted, updated post-release, and prone to drift as applications evolve.

In an AI-led system, like those operationalized by Qadence, tests are generated, selected, healed, and governed directly inside the delivery pipeline, closing the loop between development and quality.

One empirical anchor: a study of LLMs generating unit tests (from function signature + sample usage) achieved a median 70.2% statement coverage and 52.8% branch coverage, outperforming prior feedback-directed heuristics.

That doesn’t make them perfect, but it shows that generative models can already deliver a meaningful coverage uplift when paired with governance and human validation.

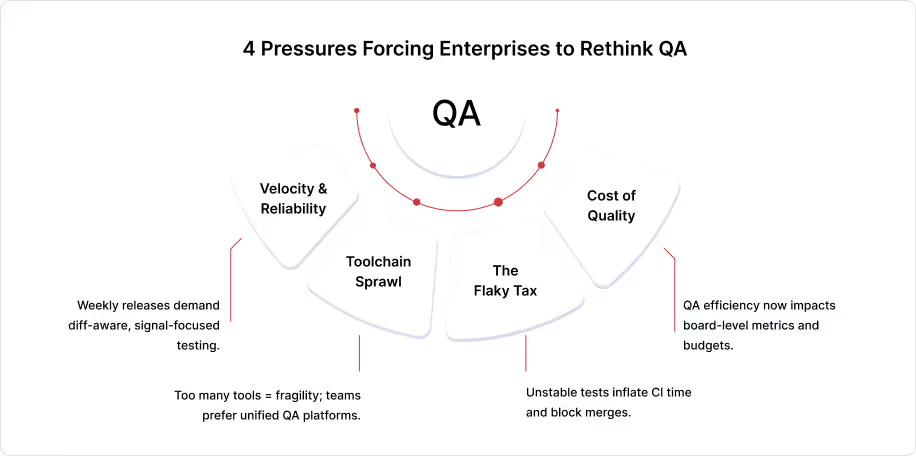

Why Companies Are Replacing Traditional QA in 2025

Most teams lack confidence at the speed they now need to ship. Below are the four macro pressures forcing change and what “good” looks like under each.

1) Velocity & Reliability: The DevOps Yardstick

Modern delivery is judged by DORA (deploy frequency, lead time, change failure rate, MTTR). Manual-heavy, script-fragile models can’t keep up with weekly/daily releases. AI-led QA stabilizes suites and keeps signals trustworthy as code changes, improving DORA without throttling developers.

What good looks like: diff-aware test selection, pre-sprint generation for critical paths, and CI/CD gates that fail on signal, not noise.
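Diff-aware selection can be sketched as a reverse walk over a dependency graph. This minimal version assumes a static module import map and a per-test list of directly exercised modules. Both are hypothetical inputs that a real system would derive from build metadata or coverage data. It keeps only tests whose targets depend on a changed module and orders them by historical failure rate. No machine-learned model is involved; model-augmented graphs would refine the same basic idea.

```python
from collections import deque


def impacted_modules(changed: set[str], imports: dict[str, set[str]]) -> set[str]:
    """Changed modules plus every module that imports them, directly or transitively."""
    importers: dict[str, set[str]] = {}
    for module, deps in imports.items():
        for dep in deps:
            importers.setdefault(dep, set()).add(module)  # reverse edge: dep -> module
    impacted = set(changed)
    queue = deque(changed)
    while queue:  # breadth-first walk over reverse edges
        for parent in importers.get(queue.popleft(), set()):
            if parent not in impacted:
                impacted.add(parent)
                queue.append(parent)
    return impacted


def select_tests(changed: set[str], imports: dict[str, set[str]],
                 test_targets: dict[str, set[str]], failure_rate: dict[str, float],
                 budget: int) -> list[str]:
    """Pick tests touching impacted code, historically riskiest first, capped at `budget`."""
    impacted = impacted_modules(changed, imports)
    relevant = [test for test, targets in test_targets.items() if targets & impacted]
    relevant.sort(key=lambda test: failure_rate.get(test, 0.0), reverse=True)
    return relevant[:budget]


imports = {"api": {"auth", "db"}, "auth": {"crypto"}, "report": {"db"}}
test_targets = {"test_login": {"auth"}, "test_report": {"report"}, "test_api": {"api"}}
failure_rate = {"test_api": 0.20, "test_login": 0.05}

print(select_tests({"crypto"}, imports, test_targets, failure_rate, budget=10))
# ['test_api', 'test_login']: test_report is skipped because nothing it touches depends on crypto
```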

2) Toolchain Sprawl → Platform Preference

Gluing point tools increases fragility and overhead. In GitLab’s Global DevSecOps research, 64% of respondents want to consolidate their toolchains; among organizations already using AI for software development, 74% want consolidation, evidence of a shift toward platform-led QA that executes and reports in one place.

What good looks like: a single pane for coverage/flake hotspots/defects, native pipeline runners, artifacted evidence, and audit-ready exports.

3) The “Flaky Tax”

Flaky tests aren’t just annoying—they’re expensive. Google reports around 1.5% of test runs are flaky, and nearly 16% of tests show some level of flakiness; Microsoft’s internal system identified ~49k flaky tests and helped avoid ~160k false failures. Research finds ~75% of flaky tests are flaky at introduction—so early detection pays the highest dividends.

What good looks like: new-test flake detectors, clustering by root cause (timing, order, environment, selector), targeted re-runs, and trend reporting.
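A new-test flake detector can be as simple as re-running a test before it is admitted to the suite and flagging any change in outcome. The sketch below assumes tests are zero-argument callables returning pass/fail. The run count of 20 and the simulated `leaky_state_test` are illustrative assumptions.

```python
import itertools
from typing import Callable


def is_flaky(test: Callable[[], bool], runs: int = 20) -> bool:
    """Re-run a newly added test; any change in outcome marks it flaky.

    Passing every run does not prove stability; rarely failing tests can still slip through.
    """
    first = test()
    for _ in range(runs - 1):
        if test() != first:
            return True  # stop early: one disagreement is enough evidence
    return False


def stable_test() -> bool:
    return sorted([3, 1, 2]) == [1, 2, 3]


_run_counter = itertools.count(1)


def leaky_state_test() -> bool:
    # Simulates state leaking between runs: every third execution fails.
    return next(_run_counter) % 3 != 0


print(is_flaky(stable_test))       # False
print(is_flaky(leaky_state_test))  # True
```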

4) The CFO Lens: Cost of Quality

The Cost of Poor Software Quality (CPSQ) in the U.S. was estimated at $2.41T in 2022, with $1.52T attributed to accumulated technical debt, making QA efficiency a financial lever, not a back-office function.

Executives ask “How fast to green?” and “How many defects still escape?” AI testing improves both metrics by tightening signals and accelerating stabilization.

What good looks like: flake-adjusted coverage, escaped-defect rate, and regression-cycle cost on monthly scorecards.
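These scorecard metrics have no single standard formula. The sketch below uses one common definition of escaped-defect rate (defects first found in production divided by all defects found) and one possible definition of flake-adjusted coverage (the share of critical paths covered by at least one test not flagged as flaky). Both definitions and the sample data are assumptions.

```python
def escaped_defect_rate(found_before_release: int, found_in_production: int) -> float:
    """Share of all defects that were first found in production."""
    total = found_before_release + found_in_production
    return found_in_production / total if total else 0.0


def flake_adjusted_coverage(path_tests: dict[str, set[str]], flaky: set[str]) -> float:
    """Share of critical paths covered by at least one test NOT flagged as flaky."""
    if not path_tests:
        return 0.0
    covered = sum(1 for tests in path_tests.values() if tests - flaky)
    return covered / len(path_tests)


critical_paths = {
    "checkout": {"test_pay_card", "test_pay_wallet"},
    "login": {"test_login"},
    "refund": {"test_refund"},
}
flaky_tests = {"test_login"}

print(escaped_defect_rate(45, 5))                            # 0.1
print(flake_adjusted_coverage(critical_paths, set()))        # 1.0 (raw coverage)
print(flake_adjusted_coverage(critical_paths, flaky_tests))  # about 0.667: login has only a flaky test
```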

Mini Comparison: Traditional QA vs. AI QA Testing

The Rise of AI Testing: Market Shifts in 2025

The tipping point for AI testing isn’t years away; it’s happening now. Multiple industry signals show adoption is accelerating:

- Gartner forecasts that by 2026, 80% of enterprises will adopt AI-augmented testing as part of their software delivery toolchain.

- Forrester notes that AI-driven self-healing and test generation can cut test maintenance costs by 50% or more, a relief for organizations where brittle suites consume most QA capacity.

- GitLab’s DevSecOps Report found that 74% of AI-using teams want toolchain consolidation, compared to 64% overall, evidence that AI doesn’t just add tools but changes how teams structure their QA platforms.

Early adopters are already reporting measurable returns. Bloomberg engineers shared that regression cycle time was reduced by 70% when flaky tests were clustered and stabilized with AI heuristics. In healthcare, providers have shifted HIPAA anomaly detection into AI-led QA pipelines, reducing compliance exposure by surfacing PHI-handling defects earlier.

AI testing isn’t a speculative bet; it’s becoming the baseline expectation for modern QA organizations.

Benefits of AI Testing for CTOs

For technology leaders, AI testing isn’t just about faster runs. It reframes how QA contributes to enterprise outcomes. Five areas matter most in 2025:

- Speed: Regression cycles that once took weeks can be reduced to days or even hours by generating candidate cases pre-sprint and pruning irrelevant runs via impact analysis.

- Coverage: Generative models propose edge cases drawn from API schemas and usage logs, cases that humans rarely author but that often expose integration faults.

- Resilience: Self-healing locators and adaptive waits prevent pipelines from stalling on brittle selectors, keeping the “signal to noise” ratio high.

- Risk Reduction: Predictive defect analytics highlight modules most likely to fail, allowing teams to direct human reviewers where risk is greatest.

- Cost Efficiency: Faster delivery and fewer flaky reruns reduce QA staffing overhead while improving developer throughput, directly impacting time-to-market.
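Predictive defect analytics in commercial tools typically rely on trained models. As a transparent stand-in, the sketch below ranks modules by two signals widely used in defect prediction: recent code churn and past defect counts. The log scaling, the `defect_weight` of 2.0, and the sample numbers are illustrative assumptions, not a validated model.

```python
import math


def rank_by_risk(churn: dict[str, int], past_defects: dict[str, int],
                 defect_weight: float = 2.0) -> list[tuple[str, float]]:
    """Rank modules by recent churn and defect history, highest risk first."""
    scores: dict[str, float] = {}
    for module in churn.keys() | past_defects.keys():
        # log1p dampens large counts so one very busy file does not dominate.
        scores[module] = (math.log1p(churn.get(module, 0))
                          + defect_weight * math.log1p(past_defects.get(module, 0)))
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


lines_changed_last_30d = {"billing": 400, "search": 50, "profile": 5}
defects_last_year = {"billing": 6, "profile": 9}

for module, score in rank_by_risk(lines_changed_last_30d, defects_last_year):
    print(f"{module:8} {score:.2f}")  # billing first, then profile, then search
```

Human reviewers would then start with the top of this list, which is the allocation the Risk Reduction point describes.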

For CTOs, these benefits align QA with board-level concerns: velocity, reliability, risk, and efficiency, all measured in DORA and cost-of-quality terms.

Flaky Tests: Causes, Costs, Fixes

No QA conversation in 2025 can avoid the “flaky tax.” If teams don’t trust the test signal, they won’t ship fast. AI testing changes the equation, but first, the mechanics:

Why Flakes Matter

Flakes inflate CI time, block merges, and corrode trust in automation. A study at Google showed 1.5% of all test runs were flaky, and nearly 16% of tests displayed some level of flakiness.

Microsoft engineers reported 49,000 flaky tests internally, which, if left unchecked, would have caused ~160,000 false failures.

Industry-wide, estimates suggest teams lose ~1.28% of developer time chasing flakes, equivalent to $2,200+ per developer, per month in large enterprises.

Root Causes

- Timing/async waits and race conditions

- Test order dependence across suites

- Environment drift: config mismatches, network instability, third-party service latency

- Selector fragility in modern front-ends

- Non-functional noise: performance spikes, CPU/memory contention

How AI Testing Fixes Them

- Detect at creation: Flagging unstable tests when first authored.

- Cluster by root cause: Timeouts vs. order vs. selector drift, with targeted fixes.

- Self-heal selectors & waits: Adjusting locators and calibrating waits; enforcing deterministic time control.

- Targeted re-runs: Rerunning only suspect clusters rather than full suites, keeping pipelines within SLOs.
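Root-cause clustering and targeted re-runs can be approximated with a rule-based classifier over failure messages. This sketch is a stand-in for learned clustering: the regex signatures, the choice to auto-rerun only the timing and environment clusters, and the sample messages are all assumptions to be tuned against a real suite's logs.

```python
import re
from collections import defaultdict

# Illustrative signature rules, checked in order; real suites tune these to their own logs.
SIGNATURES = [
    ("timing", re.compile(r"timeout|timed out|deadline exceeded", re.IGNORECASE)),
    ("selector", re.compile(r"nosuchelement|element not found|stale element", re.IGNORECASE)),
    ("environment", re.compile(r"connection (refused|reset)|503 service unavailable", re.IGNORECASE)),
    ("order", re.compile(r"already exists|duplicate key", re.IGNORECASE)),  # leftover data from another test
]
TRANSIENT = {"timing", "environment"}  # clusters worth an automatic re-run


def classify(message: str) -> str:
    for label, pattern in SIGNATURES:
        if pattern.search(message):
            return label
    return "unclassified"  # e.g. assertion failures: treat as a possible real regression


def cluster_failures(failures: dict[str, str]) -> dict[str, list[str]]:
    """Group failing test IDs by the root-cause label of their error message."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for test_id, message in failures.items():
        clusters[classify(message)].append(test_id)
    return dict(clusters)


def targeted_reruns(clusters: dict[str, list[str]]) -> list[str]:
    """Re-run only transient clusters; everything else goes straight to triage."""
    return sorted(t for label, tests in clusters.items() if label in TRANSIENT for t in tests)


failures = {
    "test_checkout": "TimeoutError: waited 30s for #pay-button",
    "test_search": "NoSuchElementException: css=.result-card",
    "test_signup": "AssertionError: expected 201, got 500",
    "test_sync": "ConnectionResetError: Connection reset by peer",
}
clusters = cluster_failures(failures)
print(clusters)
print(targeted_reruns(clusters))  # ['test_checkout', 'test_sync']
```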

The result is restored trust in CI/CD signals, so developers can ship at a daily or hourly release cadence with confidence.

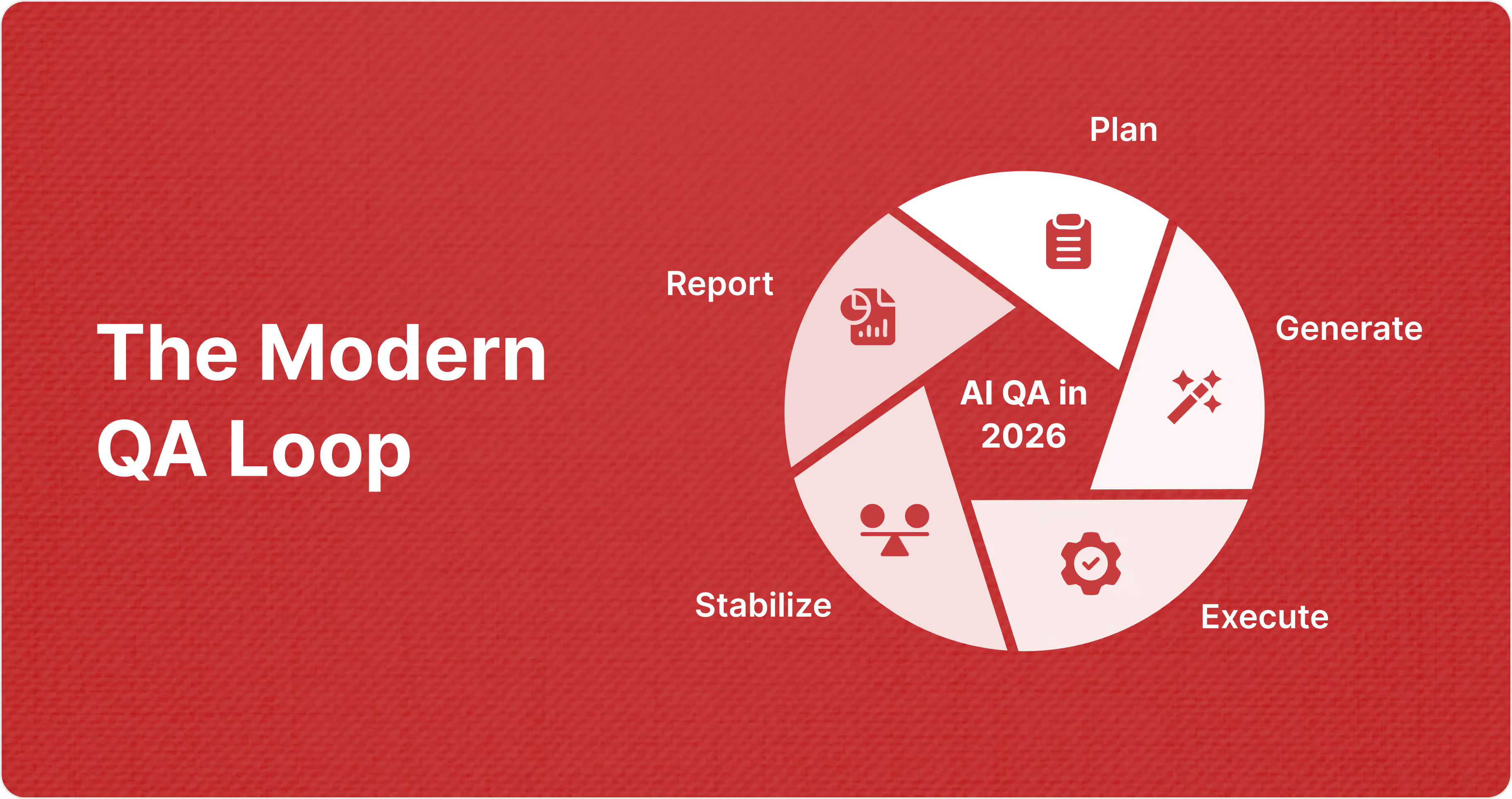

How AI Improves the QA Lifecycle (End-to-End)

The value of AI testing doesn’t come from one feature—it comes from the system. Every phase of the QA lifecycle is strengthened when intelligence is embedded directly into delivery workflows.


- Plan: Subject matter experts codify real user flows, compliance logic, negative paths, and edge conditions so the AI isn’t guessing. This upfront step produces a living model of “what must not break”, a shared artifact that aligns business, compliance, and engineering priorities.

- Run: Tests execute inside the pipeline in GitHub Actions, GitLab CI, Jenkins, or Azure DevOps, rather than as a detached stage. Parallel sharding, artifact uploads, retry budgets, and smoke gates are tuned to the risk profile of each service, so the cycle time stays predictable.

- Stabilize: New or changed tests are flagged for flake detection. Failures are clustered by signature (timeouts, order, environment drift, selector fragility) and self-healing locators reduce noise. The result: leaner, more stable suites that don’t pollute signals.

- Report: Dashboards map QA signals directly to DORA metrics (deploy frequency, lead time, change failure rate, and MTTR) along with flake-adjusted coverage and escaped-defect counts. Instead of raw pass/fail logs, leaders get actionable insights tied to delivery outcomes.
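The self-healing step in Stabilize can be sketched as an ordered list of fallback locators that accepts only unambiguous matches and logs every substitution for human review. To keep the sketch runnable without a browser, it models the DOM as a list of attribute dictionaries; real tools query a live page through a driver such as Selenium or Playwright. The class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

Element = dict[str, str]   # simplified DOM node: attribute name -> value
Locator = tuple[str, str]  # (attribute, expected value)


@dataclass
class HealingLocator:
    primary: Locator
    fallbacks: list[Locator]                          # ordered from most to least stable
    events: list[str] = field(default_factory=list)  # healing log for human review

    def find(self, dom: list[Element]) -> Optional[Element]:
        for attr, value in [self.primary, *self.fallbacks]:
            matches = [el for el in dom if el.get(attr) == value]
            if len(matches) == 1:  # accept only unambiguous matches
                if (attr, value) != self.primary:
                    self.events.append(f"healed {self.primary} -> {(attr, value)}")
                return matches[0]
        return None  # nothing unambiguous: fail loudly instead of guessing


pay_button = HealingLocator(
    primary=("id", "pay-button"),
    fallbacks=[("data-testid", "pay"), ("text", "Pay now")],
)
# After a redesign the id changed, but the test id and label survived.
new_dom = [
    {"id": "checkout-submit", "data-testid": "pay", "text": "Pay now"},
    {"id": "cancel", "text": "Cancel"},
]
print(pay_button.find(new_dom))
print(pay_button.events)
```

Refusing ambiguous matches is the key design choice: a healer that picks the first of several candidates can silently click the wrong element and turn a visible failure into a hidden one.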

Together, these phases create a closed loop: AI proposes, humans validate, pipelines execute, AI stabilizes, and dashboards feed outcomes back into planning.

How AI Testing Moves DORA (With Mechanisms)

Executives measure engineering by DORA metrics. AI testing influences each lever not by “magic,” but by concrete mechanisms.

- Deployment Frequency ↑: Cleaner signals and diff-aware prioritization keep pipelines green. By suppressing false failures and focusing runs on impacted code, teams can release smaller, safer increments more often.

- Lead Time ↓: Pre-sprint test generation reduces scripting overhead, and self-healing locators minimize rework. The combined effect shortens the time from commit to production-ready.

- Change Failure Rate ↓: Flake clustering surfaces true regressions early while suppressing noise. Because real failures are no longer buried among false reds, fewer regressions reach production and bad deploys become less frequent.

- Mean Time to Recovery (MTTR) ↓: When failures do occur, artifacted logs, videos, and clustered signatures speed diagnosis. Teams can pinpoint whether an issue is environmental, code-related, or flaky, cutting time-to-green and recovery windows.
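The four DORA measures can be computed from ordinary deployment records. This sketch assumes a hypothetical `Deployment` record with commit, deploy, and restore timestamps and uses the median for lead time. It approximates recovery time as the span from the failed deployment to restoration; DORA measures from when the failure begins, which may be later.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    hours = lambda delta: delta.total_seconds() / 3600
    lead_times = [hours(d.deployed_at - d.committed_at) for d in deploys]
    failures = [d for d in deploys if d.failed]
    restore_times = [hours(d.restored_at - d.deployed_at) for d in failures if d.restored_at]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time_h": median(lead_times) if lead_times else 0.0,
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "mean_time_to_restore_h": sum(restore_times) / len(restore_times) if restore_times else 0.0,
    }


t0 = datetime(2025, 1, 6, 9, 0)
history = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=2),
               failed=True, restored_at=t0 + timedelta(days=1, hours=3)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=3)),
]
print(dora_metrics(history, window_days=7))
```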

The end result is a QA function aligned to business value. When DORA metrics improve, so do developer productivity, release confidence, and, ultimately, customer experience.

Risks, Limits, and How to Avoid Hype

Every engineering leader wants faster green builds, but not every promise in the AI testing market survives contact with a real CI/CD pipeline. To separate value from hype, focus on four areas:

- Autonomy hype: Vendors may claim near-total test automation. In practice, the right measure is % of candidate tests auto-generated per sprint, with inputs (requirements, APIs, traffic logs) and review gates clearly documented. Without transparency, “70% automated” is just a marketing number.

- Sprawl disguised as AI: Adding plugins is not progress. Teams already facing toolchain fatigue should remember that consolidation is a top leader priority: 74% of AI-adopting teams want fewer tools, not more. The real question is whether the AI capability executes within your pipeline or adds yet another detached step.

- Ignoring flake economics: Raw pass rates hide the problem. Without flake-adjusted coverage, QA leaders can’t distinguish between true stability and suppressed noise. The cost of chasing false failures, both in engineering hours and blocked releases, can outweigh any apparent automation gain.

- Governance blind spots: AI-generated tests still handle sensitive data. A robust plan for test data management, masking/synthesis, PII/PHI handling, data residency, RBAC, approvals, and audit trails is non-negotiable in regulated sectors. Without it, compliance exposure can erase the value AI promised to deliver.
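Masking and pseudonymization can be sketched with the Python standard library. The example assumes test fixtures arrive as text. Emails are replaced with keyed HMAC-SHA256 tokens, so the same person maps to the same token across tables, while US Social Security numbers are redacted outright. The regular expressions are illustrative and far from a complete PII/PHI detector, and the key shown is a placeholder.

```python
import hashlib
import hmac
import re

# Illustrative patterns only; production masking needs a vetted PII/PHI detector.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: the same input always maps to the same token, so joins still work."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:12]


def mask_record(text: str, key: bytes) -> str:
    text = EMAIL.sub(lambda m: f"user_{pseudonymize(m.group(0), key)}@example.com", text)
    return US_SSN.sub("XXX-XX-XXXX", text)  # redact outright; no test needs the real value


key = b"load-from-your-secret-store"  # placeholder; never hard-code a real key
print(mask_record("jane.doe@corp.com filed claim, SSN 123-45-6789", key))
```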

Challenges and Myths Around AI for QA

Like any emerging technology, AI in QA attracts both optimism and skepticism. Some common myths deserve a reality check:

- “AI will replace testers.” Testers are evolving into AI supervisors. The role shifts from authoring scripts to validating AI outputs, setting guardrails, and curating domain-specific logic.

- “AI is a black box.” Explainability and auditability are improving rapidly. Modern AI testing platforms log inputs, outputs, model versions, and reviewer decisions, giving QA leads the visibility they need for audits.

- “Integration is seamless.” Legacy QA habits can create friction. Embedding AI testing into existing CI/CD pipelines requires cultural change as much as technical adoption, so teams must budget for process realignment.

- “Transition costs are negligible.” The short-term lift (training, integration, and governance setup) is real. But pilots typically show that long-term ROI (faster releases, leaner staffing, lower defect escape rates) outweighs the initial cost.

AI testing is not a silver bullet. It is a force multiplier, if teams approach it with realism and rigor.

Best Practices for Adopting AI Testing in 2025

Adoption is less about buying a tool and more about structuring the journey. Enterprise teams in 2025 should focus on five practices:


- Start small, then scale: Begin with regression or API testing, the high-value domains where flaky debt and coverage gaps are most visible. Use early wins to build credibility.

- Blend AI into CI/CD, don’t silo it: QA must live inside delivery pipelines. Integrations with GitHub Actions, GitLab, Jenkins, or Azure DevOps should be first-class, not bolted-on jobs.

- Retrain testers as supervisors: Shift the QA skill set from script authorship to AI oversight, including prompt curation, output review, edge-case validation, and governance monitoring.

- Select vendors carefully: Demand explainability, compliance readiness, and scalability. Ask for proof of audit logs, RBAC, synthetic data flows, and case studies relevant to your sector.

- Track the right metrics: Move beyond raw test counts. Monitor defect escape rate, flake-adjusted coverage, regression cycle cost, change failure rate (CFR), and time-to-green. These tie QA back to DORA and board-level KPIs.

By following these practices, enterprises can adopt AI testing with eyes wide open, gaining speed and stability without falling prey to hype or hidden costs.

Qadence: The Future of AI Quality Assurance

The future of QA is already in motion. Qadence turns the principles outlined above into a working model inside enterprise delivery pipelines, combining compiler-grade analysis, Gen AI agents, and human-in-the-loop governance to make AI testing practical, predictable, and compliant.

How Qadence Works

Qadence is built around an agentic QA architecture designed to operate natively within CI/CD.

- Analyze: Dependency and code graphing map what changed across commits, identifying the exact impact zone for testing.

- Generate: Gen AI agents create regression and integration candidates from requirements, OpenAPI specs, and commit diffs, while SMEs review and refine critical and compliance-sensitive paths.

- Execute: Tests run directly within GitHub, GitLab, Jenkins, or Azure DevOps. Diff-aware selection and parallel runs keep cycles short and deterministic.

- Stabilize & Govern: Self-healing locators, flake clustering, and masked or synthetic data pipelines ensure stability, transparency, and compliance across environments.

This closed-loop system keeps QA in lockstep with development—always current, always governed.

What Teams Gain

- Speed without sprawl: Spin up in a day; no separate toolchain, dashboards, or plugins.

- Higher coverage: 40–70% of regression candidates generated automatically, validated by SMEs.

- Reliable signals: Flake detection and self-healing reduce false reds, keeping confidence high.

- Continuous compliance: Every generated test, fix, and data flow is logged and reviewable for audit readiness.

With Qadence, AI testing becomes less about automation claims and more about operational confidence, a system that stabilizes itself, scales across releases, and reports in language leadership already tracks.

What’s Next?

It’s worth noting that AI-led QA is not the finish line but the foundation. The next horizon is autonomous testing, where systems adapt dynamically as applications evolve:

- Predictive QA: Models anticipate coverage gaps and generate new tests as business flows change, without waiting for manual inputs.

- Continuous learning: Test suites improve with every release, incorporating production telemetry and past failures to refine coverage.

- From cost center to differentiator: QA shifts from being a reactive safety net to a strategic asset that accelerates delivery, strengthens compliance posture, and improves customer trust.

The path forward is not “more automation”. It’s smarter, self-improving QA ecosystems, always governed, always explainable.

Conclusion: Why 2025 is the Year to Move Beyond Traditional QA

By 2025, manual-heavy, script-fragile approaches cannot keep up with enterprise demands for velocity, compliance, and reliability.

What AI testing offers is a practical response to structural pressures:

- Weekly or daily releases judged by DORA outcomes.

- Rising compliance obligations around PII, PHI, and auditability.

- The cost of poor software quality, now measured in trillions.

The enterprises that act now will exit 2025 with leaner QA teams, stronger release confidence, and measurable ROI.

Where Qadence Fits

Qadence operationalizes this shift by combining:

- Compiler-grade analysis for dependency mapping and diff-aware test selection.

- Gen AI agents for candidate generation, self-healing, and flake clustering.

- Human-in-the-loop reviews to ensure domain-critical coverage and compliance alignment.

The result: faster time-to-green, lower flake debt, and dashboards tied directly to DORA.

Next Step: Automate Your First Test Case, Free

Experience how Qadence works in your own environment with no setup, no contracts, and no cost.

Submit a single test case from your backlog, and we’ll automate it end-to-end within your existing CI/CD pipeline, including generation, execution, and stabilization.

Here’s what’s included:

- One real test case automated at no cost, built and executed by the Qadence platform.

- CI/CD-native delivery using your current setup (GitHub, GitLab, Jenkins, or Azure DevOps).

- Comprehensive output: Working test, stability summary, and insights into automation scalability.

- Zero-risk evaluation: If you find value, we’ll explore scaling; if not, you still keep the automated test and results.